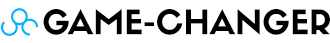

Generative AI is here to stay. As with earlier technology waves, startups are driving it. The late adopters? Corporations. According to a Boston Consulting Group (BCG) survey of 2,000 global executives, more than 50% still discourage Generative AI adoption. Why?

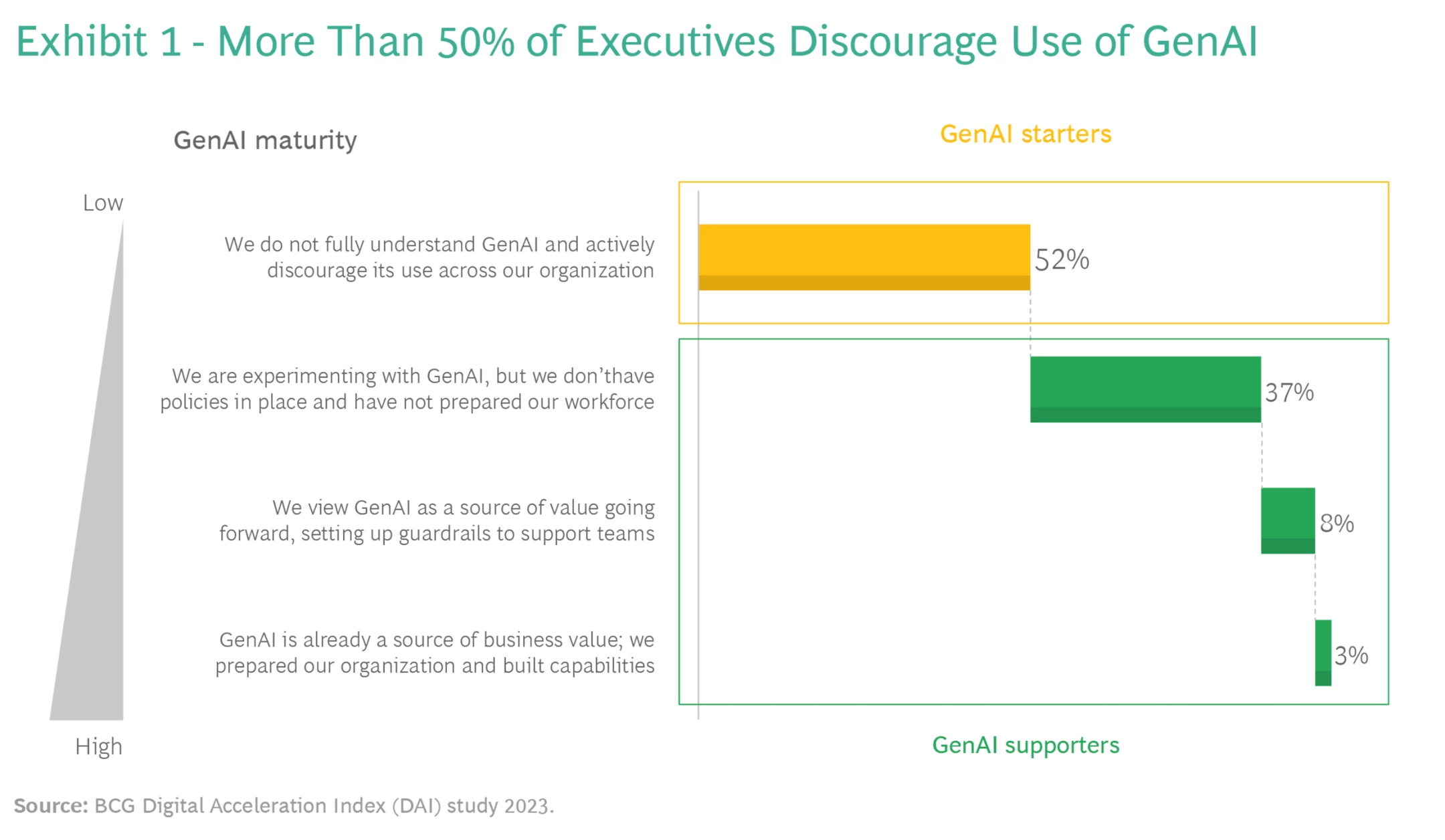

There isn’t a single reason; there are many.

The real concerns should be data privacy, hacking, and the reverse engineering of sensitive processes. If executives are worried about bad decisions or wrong facts, they need to train employees to verify facts and hire people with better judgment. Employees should exercise good judgment regardless of automated assistants and recommendations.

This speaks to governance concerns as well as to talent and management skills.

So, what do you do?

Below, I offer a potential solution for each concern:

| Concern | Potential solution |
| --- | --- |
| Limited traceability of sources | Implement AI systems with built-in source attribution, maintaining a clear data lineage and provenance. |
| Making factually wrong decisions | Pair AI decisions with human validation. Implement feedback loops for continuous learning and error rectification. |
| Compromised privacy of personal data | Apply data anonymization and encryption techniques. Implement data usage policies and obtain explicit user consent. |
| Increased risk of data breaches | Strengthen cybersecurity measures, conduct regular security audits, and use AI to detect and prevent intrusions. |
| Unreproducible outcomes | Use version control for AI models, maintain a log of all input data, and apply consistent training methodologies. |
| Higher vulnerability to hacking | Deploy AI-driven cybersecurity solutions, regularly update software, and invest in ethical hacking to find vulnerabilities. |
| Not being compliant with regulation | Regularly review AI systems against prevailing regulations, engage with legal teams, and conduct compliance audits. |
| Resistance in workforce | Organize AI awareness programs, invest in training and upskilling, and communicate the value of AI to the workforce. |
| Making biased decisions | Use diverse training data, conduct regular bias audits, and implement fairness checks within AI decision processes. |
| Potentially low ROI | Conduct pilot projects to measure value, frequently evaluate AI project outcomes against costs, and adjust strategies. |
| Increased carbon footprint | Optimize AI algorithms for efficiency, invest in sustainable and green computing infrastructure, and offset emissions. |
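To make two of these rows a little more concrete (traceability of sources and unreproducible outcomes), here is a minimal Python sketch of an audit log for AI calls. It is only one possible approach, not a standard. The function names `make_audit_record` and `append_record`, the field names, and the JSON Lines file layout are all my own assumptions for illustration. Each entry records which model version produced an answer, a hash of the input, and the sources the answer cited.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_audit_record(prompt, output, model_version, sources):
    """Build one audit-log entry for a single AI call.

    All field names here are illustrative assumptions, not a standard schema.
    """
    return {
        # When the call was logged, in UTC.
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Which model version produced the output (supports reproducibility).
        "model_version": model_version,
        # Hash of the input, so a later input can be checked against the log
        # without keeping potentially sensitive text in the log itself.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output": output,
        # Identifiers of the documents the answer relied on (supports traceability).
        "sources": list(sources),
    }


def append_record(path, record):
    """Append one record to a JSON Lines file (one JSON object per line)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
```

Storing only a hash of the prompt is a privacy-leaning choice. A team that needs to fully replay past runs would likely also keep the raw input in a secured, access-controlled store.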

What do you think? What would you add?